Recurrent Neural Networks (RNNs) have emerged as a powerful tool in artificial intelligence, particularly for tasks involving sequential data. Unlike feedforward neural networks, which treat each input independently, RNNs carry information from earlier elements of a sequence forward to later ones. This makes them well suited to applications such as natural language processing, time series prediction, and speech recognition. This article provides an overview of RNNs, their architecture, applications, and the challenges they face.

Understanding the Architecture of RNNs

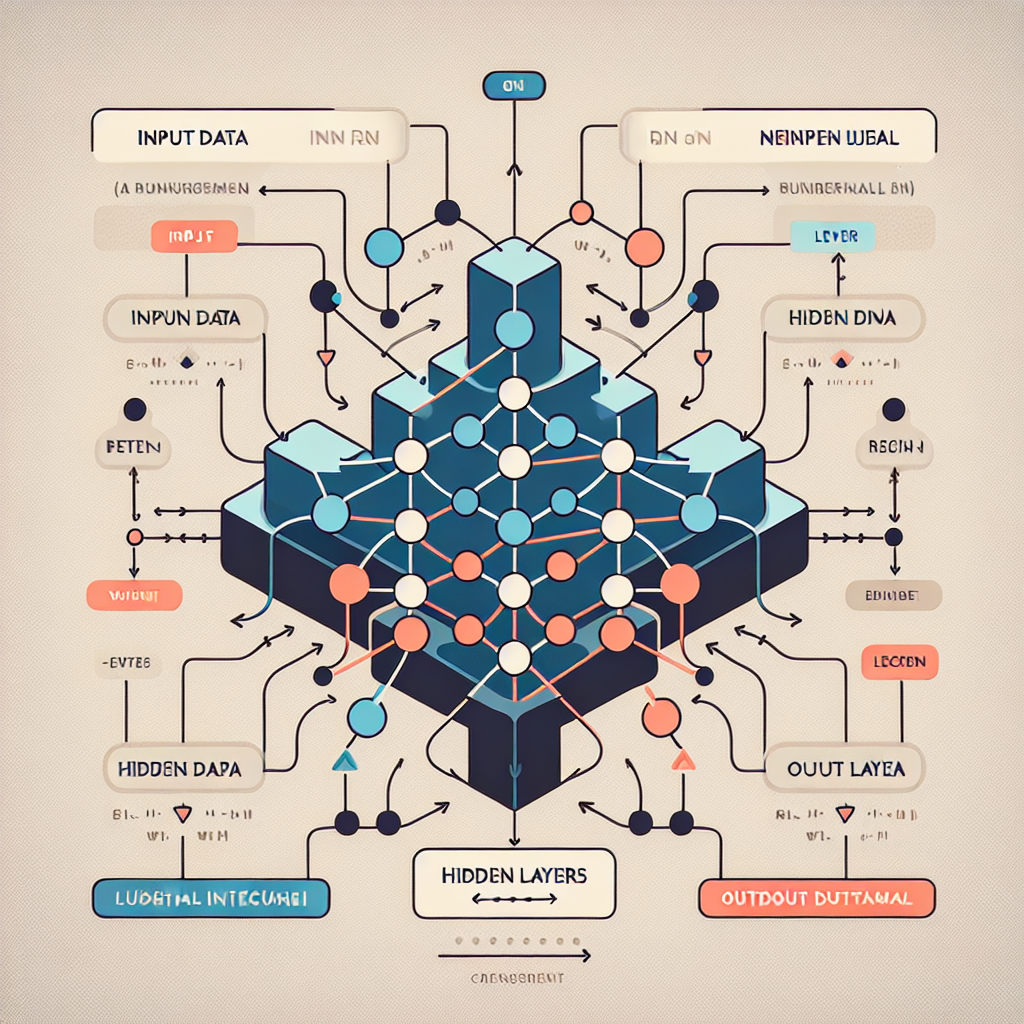

What sets RNNs apart from feedforward networks is a recurrent connection: the hidden layer's output at one time step is fed back as an input at the next. This loop lets information persist across the sequence, giving the network a ‘memory’ of previous inputs, which is crucial for processing sequences.

- Input Layer: The input layer receives the sequential data one element per time step. The data can be a series of words, measurements taken over time, or any other ordered data.

- Hidden Layer: The hidden layer contains recurrent connections that allow the network to maintain a state across time steps. At each time step, this state is updated from the current input and the previous state, using the same weights at every step.

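- Output Layer: The output layer produces a prediction or classification from the hidden state. It can do so at every time step (for example, tagging each word in a sentence) or only after the final step (for example, classifying a whole sentence).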
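The recurrence can be made concrete with a minimal sketch of a single-layer (Elman-style) RNN forward pass in plain Python. The sketch assumes the common formulation h_t = tanh(W_xh · x_t + W_hh · h_{t-1} + b_h), with output y_t = W_hy · h_t + b_y. The class name `VanillaRNN`, the helper functions, the random initialization, and the tanh activation are illustrative choices, not a reference implementation. Real projects would normally use a framework such as PyTorch or JAX.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def add(*vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vectors)]


def init_matrix(rows, cols, rng, scale=0.5):
    """Small uniform random weights (an illustrative choice, not a recommended scheme)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class VanillaRNN:
    """Hypothetical minimal RNN: input layer -> recurrent hidden layer -> output layer."""

    def __init__(self, input_size, hidden_size, output_size, seed=0):
        rng = random.Random(seed)
        self.W_xh = init_matrix(hidden_size, input_size, rng)   # input -> hidden
        self.W_hh = init_matrix(hidden_size, hidden_size, rng)  # hidden -> hidden (the loop)
        self.W_hy = init_matrix(output_size, hidden_size, rng)  # hidden -> output
        self.b_h = [0.0] * hidden_size
        self.b_y = [0.0] * output_size
        self.hidden_size = hidden_size

    def forward(self, inputs):
        """Process a sequence of input vectors; return per-step outputs and hidden states."""
        h = [0.0] * self.hidden_size  # initial state: nothing remembered yet
        outputs, states = [], []
        for x_t in inputs:
            # The new state combines the current input with the previous state,
            # using the same weights at every time step.
            pre = add(matvec(self.W_xh, x_t), matvec(self.W_hh, h), self.b_h)
            h = [math.tanh(v) for v in pre]
            y_t = add(matvec(self.W_hy, h), self.b_y)
            outputs.append(y_t)
            states.append(h)
        return outputs, states


rnn = VanillaRNN(input_size=3, hidden_size=4, output_size=2)
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
outputs, states = rnn.forward(sequence)
print(len(outputs), len(outputs[0]))  # 3 2: one 2-dimensional output per time step
```

`W_hh` is applied to the previous state at every step. As a result, feeding the same vectors in a different order generally produces a different final state, which is the order sensitivity a feedforward layer lacks.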

Applications of RNNs

RNNs have found applications across various domains due to their ability to handle sequential data effectively. Some notable applications include:

- Natural Language Processing (NLP): RNNs are widely used in NLP tasks such as language modeling, text generation, and machine translation. For instance, Google's Neural Machine Translation system, introduced for Google Translate in 2016, was built on deep LSTM networks (a type of RNN discussed below).

- Speech Recognition: RNNs have been instrumental in converting spoken language into text. They can process audio signals as sequences of acoustic frames, which makes them suitable for applications like virtual assistants (e.g., Siri, Alexa).

- Time Series Prediction: RNNs are used in financial forecasting, weather prediction, and stock market analysis, where historical data is crucial for making future predictions.
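For time series prediction, one common framing (among several) is one-step-ahead forecasting. The RNN reads a fixed-length window of past values and is trained to predict the next value. The sketch below only prepares such (input, target) pairs. The function name `make_windows`, the window length, and the sample readings are hypothetical.

```python
def make_windows(series, window):
    """Split a 1-D series into (input sequence, next value) pairs for one-step-ahead forecasting."""
    if window < 1 or window >= len(series):
        raise ValueError("window must be between 1 and len(series) - 1")
    pairs = []
    for start in range(len(series) - window):
        inputs = series[start:start + window]  # history the RNN reads step by step
        target = series[start + window]        # value it should predict next
        pairs.append((inputs, target))
    return pairs


readings = [12.1, 12.4, 12.9, 13.3, 13.0, 12.7]  # hypothetical daily measurements
for inputs, target in make_windows(readings, window=3):
    print(inputs, "->", target)
```

Each input sequence would be fed to the network one value per time step, and the output after the last step would be compared with the target.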

Challenges and Limitations of RNNs

Despite their advantages, RNNs face several challenges that can hinder their performance:

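- Vanishing Gradient Problem: RNNs are trained with backpropagation through time. The gradient that reaches an early time step is a product of one factor per intervening step. When these factors are smaller than one, the gradient shrinks exponentially with distance, making it difficult for the network to learn long-range dependencies. This issue is particularly pronounced in standard RNNs. The opposite case, exploding gradients, occurs when the factors are larger than one and is commonly handled with gradient clipping.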

- Computational Complexity: Backpropagation through time must keep the hidden state for every time step. Memory use and training time therefore grow with sequence length, which makes long sequences expensive to train on.

- Difficulty in Parallelization: Each hidden state depends on the previous one, so the time steps of a sequence must be computed one after another. This limits parallelism on hardware such as GPUs and slows down both training and inference.
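The vanishing gradient problem can be observed in a scalar RNN with a single hidden unit, h_t = tanh(w · h_{t-1} + u · x_t). By the chain rule, the derivative of the last state with respect to the initial state is a product of one factor w · (1 - h_t**2) per time step. Whenever those factors are below one in magnitude, the gradient decays exponentially with sequence length. This sketch assumes arbitrary values w = 0.9 and u = 0.5 and a constant input of 1.0, and its function names are hypothetical. The loop in `run_scalar_rnn` also has to run one step at a time, which is the parallelization bottleneck described in the list above.

```python
import math


def run_scalar_rnn(h0, xs, w, u):
    """Scalar RNN h_t = tanh(w * h_{t-1} + u * x_t); returns every hidden state."""
    states = []
    h = h0
    for x in xs:  # inherently sequential: step t needs h_{t-1}
        h = math.tanh(w * h + u * x)
        states.append(h)
    return states


def grad_final_wrt_initial(h0, xs, w, u):
    """d h_T / d h_0 via the chain rule (backpropagation through time).

    Each step contributes w * (1 - h_t**2), the derivative of
    tanh(w * h_{t-1} + u * x_t) with respect to h_{t-1}.
    """
    grad = 1.0
    for h_t in run_scalar_rnn(h0, xs, w, u):
        grad *= w * (1.0 - h_t ** 2)
    return grad


w, u = 0.9, 0.5  # assumed example weights
for T in (1, 5, 10, 20, 50):
    xs = [1.0] * T
    print(T, grad_final_wrt_initial(0.0, xs, w, u))
```

The printed gradients shrink rapidly as T grows. With these settings, each factor is at most 0.9 in magnitude, because 1 - h_t**2 never exceeds 1.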

Advancements in RNNs: LSTM and GRU

To address some of the limitations of traditional RNNs, researchers have developed advanced architectures such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU). These architectures incorporate mechanisms to better manage the flow of information and gradients, allowing them to learn long-range dependencies more effectively.

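- LSTM: LSTMs maintain a separate cell state and use three gates (input, forget, and output) to control what is written to it, what is kept in it, and what is read from it. The cell state is updated additively rather than by repeated multiplication through the recurrent weights, so gradients can flow across many time steps. This significantly mitigates the vanishing gradient problem.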

- GRU: GRUs simplify the LSTM architecture by combining the forget and input gates into a single update gate, adding a reset gate, and merging the cell state with the hidden state. With fewer gates and parameters, they are computationally cheaper and often perform comparably to LSTMs.
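The gating equations can be sketched with a hidden size of 1, so that every gate is a single number. The sketch follows the widely used formulations: an LSTM with forget, input, and output gates plus a tanh candidate, and a GRU with update and reset gates. Several details are assumptions made for illustration:

- the parameter names such as `W_f` and `U_z`,
- the all-0.5 weights,
- the GRU convention h_t = (1 - z) · n + z · h_{t-1}.

Papers and libraries differ on details such as which term the update gate multiplies.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step with hidden size 1; p maps hypothetical names like 'W_f' to floats."""
    f = sigmoid(p["W_f"] * x + p["U_f"] * h_prev + p["b_f"])    # forget gate
    i = sigmoid(p["W_i"] * x + p["U_i"] * h_prev + p["b_i"])    # input gate
    o = sigmoid(p["W_o"] * x + p["U_o"] * h_prev + p["b_o"])    # output gate
    g = math.tanh(p["W_g"] * x + p["U_g"] * h_prev + p["b_g"])  # candidate values
    c = f * c_prev + i * g  # additive cell-state update: keep some old, write some new
    h = o * math.tanh(c)    # output gate decides how much of the cell to expose
    return h, c


def gru_step(x, h_prev, p):
    """One GRU step with hidden size 1; p maps hypothetical names like 'W_z' to floats."""
    z = sigmoid(p["W_z"] * x + p["U_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["W_r"] * x + p["U_r"] * h_prev + p["b_r"])  # reset gate
    n = math.tanh(p["W_n"] * x + p["U_n"] * (r * h_prev) + p["b_n"])  # candidate state
    return (1.0 - z) * n + z * h_prev  # z controls how much of the old state is kept


def gate_param_count(input_size, hidden_size, num_blocks):
    """Weights plus one bias vector per gate block: 4 blocks for LSTM, 3 for GRU."""
    return num_blocks * (hidden_size * (input_size + hidden_size) + hidden_size)


lstm_params = {f"{k}_{g}": 0.5 for k in ("W", "U", "b") for g in "fiog"}
gru_params = {f"{k}_{g}": 0.5 for k in ("W", "U", "b") for g in "zrn"}

h, c, h_gru = 0.0, 0.0, 0.0
for x in [1.0, 0.5, -0.5]:
    h, c = lstm_step(x, h, c, lstm_params)
    h_gru = gru_step(x, h_gru, gru_params)
print(h, c, h_gru)
print(gate_param_count(32, 64, 4), gate_param_count(32, 64, 3))  # 24832 18624
```

The `gate_param_count` helper assumes one bias vector per gate block; some libraries, such as PyTorch, keep two. Under that assumption, a GRU has three quarters of the parameters of an LSTM with the same sizes, which is one source of its efficiency advantage.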

Conclusion

Recurrent Neural Networks have transformed the way we process sequential data, enabling significant advancements in fields such as NLP, speech recognition, and time series analysis. They come with challenges like the vanishing gradient problem and the cost of sequential computation. Gated architectures such as LSTMs and GRUs have made RNNs far easier to train on long sequences. As research continues to evolve, RNNs and their gated variants are likely to remain an important tool in artificial intelligence, paving the way for more sophisticated applications and solutions.
